Introduction

In today’s fast-paced business world, staying ahead of the competition means leveraging cutting-edge technologies like artificial intelligence. But how do you go from an AI concept to a production-ready solution without getting lost in the complexities?

This guide walks you through a three-step process for building Retrieval-Augmented Generation (RAG) systems on Amazon Web Services (AWS). Whether you’re an AI novice or a seasoned pro, you’ll learn how to:

Test solutions on a single document

Build a proof of concept (POC)

Scale to a production-ready solution

But first, let’s talk about why RAG is a game-changer for your enterprise.

The Power of RAG: Your AI’s Secret Weapon

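Imagine having a brilliant assistant who not only answers your questions but also has instant access to your entire company’s knowledge base. That’s RAG in a nutshell. Before generating a response, a RAG system retrieves the passages most relevant to the question from your own data and passes them to the model along with the question. Answers are therefore grounded in your documents rather than only in what the model learned during training. This improves accuracy and context, although an answer can only be as good as the content that was retrieved.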
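To make the retrieve-then-generate idea concrete, here is a toy Python sketch of the “retrieve” and “augment” halves. It scores passages by simple word overlap, a deliberately crude stand-in for the vector similarity search a real system uses, and the knowledge-base sentences are hypothetical. The prompt it builds is what would be sent to the model for the “generate” step.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages that share the most words with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it goes to the model."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical company knowledge base.
knowledge_base = [
    "Employees accrue 20 vacation days per year.",
    "Expense reports are due within 30 days of purchase.",
    "The office is closed on public holidays.",
]
print(build_prompt("How many vacation days do employees get?", knowledge_base, k=1))
```

The instruction to answer only from the context is what keeps the model grounded; without it, the model may fall back on its training data.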

Ready to supercharge your enterprise with AI? Let’s dive in!

Step 1: Testing Solutions on a Single Document

Goal: Validate Your AI’s Responses

Before you invest time and resources into a full-scale AI system, it’s crucial to ensure that your RAG solution delivers high-quality responses. This step is like a taste test for your AI – quick, easy, and incredibly insightful.

The Secret Ingredient: Amazon Bedrock Knowledge Bases

We’ll use Amazon Bedrock Knowledge Bases to get started. It’s so user-friendly that you’ll be testing your AI in minutes, no PhD required!

Implementation: Your 5-Minute Setup Guide

Log in to the AWS console at console.aws.amazon.com

Search for “Bedrock” in the services menu

Navigate to Builder tools > Knowledge bases > Chat with your document

Select Claude 3 Sonnet from Anthropic as your model

Set inference parameters:

Temperature: 0 (for consistent, near-deterministic enterprise responses)

Top P: 1

Response length: 2K tokens

Upload your test document

For detailed instructions, check out the Amazon Bedrock documentation.
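The console needs no code. If you later want to script the same test, the settings above map onto the Amazon Bedrock Converse API roughly as sketched below. This is a minimal sketch under stated assumptions: it uses boto3, assumes the Claude 3 Sonnet model ID shown is enabled in your Region (us-east-1 is an arbitrary default), and simply places the whole document in the prompt, which is a simplification and not how the console feature works internally.

```python
# Assumption: Claude 3 Sonnet model ID on Amazon Bedrock; confirm it is enabled in your Region.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_converse_request(document_text: str, question: str) -> dict:
    """Keyword arguments for the bedrock-runtime converse() call, mirroring the console settings."""
    return {
        "modelId": MODEL_ID,
        "messages": [
            {
                "role": "user",
                # Simplification: the whole document is placed in the prompt.
                "content": [{"text": f"Document:\n{document_text}\n\nQuestion: {question}"}],
            }
        ],
        "inferenceConfig": {
            "temperature": 0.0,  # near-deterministic output
            "topP": 1.0,         # no nucleus-sampling cutoff
            "maxTokens": 2048,   # the "2K tokens" response length
        },
    }


def ask(document_text: str, question: str, region: str = "us-east-1") -> str:
    """Call Bedrock; needs boto3, AWS credentials, and model access in the Region."""
    import boto3  # deferred so build_converse_request() works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(document_text, question))
    return response["output"]["message"]["content"][0]["text"]
```

Keeping the request builder separate from the network call makes it easy to check the settings without touching AWS.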

Put Your AI to the Test

Now for the exciting part: start asking questions! Ask about topics your document covers and compare each answer with the source text to see how accurately the AI extracts insights.

Pro Tip: Try asking about complex policies or procedures. The more challenging the question, the better you’ll understand your AI’s capabilities.
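To keep the taste test repeatable, you can record a few questions together with facts a correct answer must contain, and score the answers you copy from the console. The sketch below is a hypothetical harness, not a Bedrock feature; keyword matching is a crude check that complements, rather than replaces, reading the answers yourself.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    question: str
    must_mention: list[str]  # facts a correct answer should contain


def pass_rate(cases: list[EvalCase], answers: list[str]) -> float:
    """Fraction of answers that mention every expected fact (case-insensitive)."""
    if len(cases) != len(answers) or not cases:
        raise ValueError("need exactly one answer per test case")
    passed = sum(
        all(term.lower() in answer.lower() for term in case.must_mention)
        for case, answer in zip(cases, answers)
    )
    return passed / len(cases)


# Hypothetical questions about a hypothetical HR policy document.
cases = [
    EvalCase("How many vacation days do new hires get?", ["20 days"]),
    EvalCase("Who approves expense reports over $500?", ["finance", "manager"]),
]
# Answers copied from the console chat by hand.
answers = [
    "New hires receive 20 days of paid vacation per year.",
    "Your manager approves them.",
]
print(f"Pass rate: {pass_rate(cases, answers):.0%}")  # prints "Pass rate: 50%"
```

Rerunning the same cases after changing the model or inference settings gives a quick before-and-after comparison.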

Why Step 1 is a Game-Changer

Speed: Get started in minutes, not months

Ease of use: No coding required – perfect for business leaders and decision-makers

Immediate value: See the potential of RAG without breaking the bank

Ready to take your AI to the next level? Let’s move on to Step 2!

Step 2: Building a Proof of Concept (POC)

Goal: Scale Up and Impress Your Stakeholders

You’ve seen the potential – now it’s time to prove it. In this step, we’ll build a POC that can handle more documents and users, giving you a real taste of what AI can do for your business.

The Dream Team: LangChain, Amazon S3, and Amazon Bedrock

We’re bringing in the big guns to make your POC shine:

LangChain for orchestration, with LanceDB for vector storage and retrieval

Amazon S3 for document storage

Amazon Bedrock for state-of-the-art foundation models

Architecture: Your Blueprint for Success

Our POC architecture has two main components:

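Data Ingestion: Documents are loaded from S3, split into chunks, converted into vector embeddings, and stored in LanceDB

Data Retrieval: Each question is embedded, the most similar chunks are fetched from LanceDB, and a Bedrock foundation model generates the answer from those chunks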
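A key part of getting data from S3 into vectors is splitting each document into chunks before embedding. In the POC you would likely use a LangChain text splitter such as `RecursiveCharacterTextSplitter`; the plain-Python sketch below only illustrates the idea. Its word-based sizes and default values are assumptions for illustration, not recommendations.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of up to chunk_size words, repeating
    `overlap` words between consecutive chunks so context isn't cut mid-thought."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


print(chunk_text("one two three four five six seven", chunk_size=4, overlap=1))
# ['one two three four', 'four five six seven']
```

Larger chunks carry more context per retrieval hit but also more tokens per prompt; overlap reduces the chance that an answer is split across two chunks.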

Implementation: Your Step-by-Step Guide to POC Greatness

Set up LangChain using its documentation

Create an Amazon S3 bucket for document storage

Configure Amazon Bedrock for AI processing

Implement the data ingestion and retrieval pipelines

Test your shiny new POC!
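The retrieval pipeline comes down to nearest-neighbor search over embedding vectors, which LanceDB handles for you in the POC. The toy in-memory store below only illustrates the idea with cosine similarity; the hand-written 3-dimensional vectors are hypothetical stand-ins for the embeddings a Bedrock embedding model would produce.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Toy stand-in for a vector database such as LanceDB."""

    def __init__(self) -> None:
        self.rows: list[tuple[list[float], str]] = []

    def add(self, vector: list[float], chunk: str) -> None:
        self.rows.append((vector, chunk))

    def search(self, query: list[float], k: int = 3) -> list[str]:
        """Return the k stored chunks most similar to the query vector."""
        ranked = sorted(self.rows, key=lambda row: cosine(query, row[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


# Hand-made 3-D vectors; real embeddings have hundreds or thousands of dimensions.
store = InMemoryVectorStore()
store.add([0.9, 0.1, 0.0], "Refunds are issued within 14 days.")
store.add([0.0, 1.0, 0.0], "Our headquarters are in Seattle.")
store.add([0.8, 0.0, 0.2], "Refund requests need an order number.")
print(store.search([1.0, 0.0, 0.0], k=2))
```

This brute-force scan compares the query with every stored vector; vector databases use approximate indexes to avoid that cost as collections grow.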

Expert Insight: Pay close attention to response times and accuracy during testing. These metrics will be crucial for getting buy-in from decision-makers.
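One way to capture response times during POC testing is to time each query and report percentiles rather than only an average, since a few slow answers can hide behind a good mean. In this sketch, `answer_fn` is a hypothetical placeholder for whatever function your POC exposes to answer a question.

```python
import math
import time
from typing import Callable


def time_queries(answer_fn: Callable[[str], str], questions: list[str]) -> list[float]:
    """Wall-clock latency in milliseconds for each call to answer_fn(question)."""
    latencies = []
    for question in questions:
        start = time.perf_counter()
        answer_fn(question)
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, for 0 < p <= 100."""
    if not values or not 0 < p <= 100:
        raise ValueError("need at least one value and 0 < p <= 100")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Replace the lambda with your POC's real answer function.
latencies = time_queries(lambda q: q.upper(), ["q1", "q2", "q3"])
print(f"p50={percentile(latencies, 50):.1f} ms  p95={percentile(latencies, 95):.1f} ms")
```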

Why Your Stakeholders Will Love Step 2

Low risk, high reward: Build a working POC without breaking the bank

Tangible results: Show, don’t tell – let your stakeholders experience the AI magic firsthand

Rapid iteration: Quickly refine your system based on real user feedback

Excited to see your POC in action? Let’s scale it up in Step 3!

Step 3: Scaling to Production

Goal: Enterprise-Grade AI at Your Fingertips

This is where the magic happens. We’ll transform your successful POC into a robust, scalable solution that can handle anything your enterprise throws at it.

The Power Players: OpenSearch, Automated Pipelines, and CloudWatch

To take your RAG system to the next level, we’re upgrading key components:

Replace the POC’s LangChain and LanceDB vector store with Amazon OpenSearch Serverless for scalable vector storage; S3 remains the document source

Implement automated pipelines that ingest new and updated documents without manual steps

Use Amazon CloudWatch for real-time monitoring and insights
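One common way to automate ingestion, assumed here rather than prescribed by this guide, is to have S3 event notifications trigger an AWS Lambda function whenever a document is uploaded. The sketch below shows only the event-parsing part of such a handler; the downstream chunk, embed, and index step is a hypothetical placeholder.

```python
from urllib.parse import unquote_plus


def new_documents(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs for newly created objects from an S3 event.
    Object keys arrive URL-encoded (spaces become '+'), so decode them."""
    return [
        (record["s3"]["bucket"]["name"], unquote_plus(record["s3"]["object"]["key"]))
        for record in event.get("Records", [])
        if record.get("eventName", "").startswith("ObjectCreated")
    ]


def lambda_handler(event, context):
    docs = new_documents(event)
    for bucket, key in docs:
        # Hypothetical next step: download, chunk, embed, and index the document.
        print(f"Would ingest s3://{bucket}/{key}")
    return {"processed": len(docs)}
```

Filtering on `ObjectCreated` events keeps deletions from being treated as new documents; deleted objects would need a separate path that removes their vectors from the index.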

Architecture: Built for Scale, Designed for Success

Our production architecture maintains the core strengths of the POC while supercharging its capabilities:

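Data Ingestion: Documents are processed from S3 into OpenSearch Serverless, keeping the vector index current

Data Retrieval: Vector search in OpenSearch Serverless supplies context to the Bedrock model, with capacity that scales with query volume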
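In OpenSearch, vector search runs against an index with a `knn_vector` field. The sketch below builds an index definition and a k-NN query as plain dictionaries, which could be passed to opensearch-py’s `indices.create(index=..., body=...)` and `search(index=..., body=...)` (with SigV4 authentication for the `aoss` service). The 1,024-dimension size, field names, and HNSW/Faiss/L2 choices are assumptions; the dimension must match your embedding model.

```python
EMBEDDING_DIM = 1024  # assumption: must equal your embedding model's output size


def index_body(dim: int = EMBEDDING_DIM) -> dict:
    """Index definition: a k-NN vector field plus the chunk text and its source."""
    return {
        "settings": {"index.knn": True},
        "mappings": {
            "properties": {
                "embedding": {
                    "type": "knn_vector",
                    "dimension": dim,
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "l2"},
                },
                "text": {"type": "text"},
                "source": {"type": "keyword"},  # e.g. the S3 key, for citations
            }
        },
    }


def knn_query(vector: list[float], k: int = 5) -> dict:
    """Search body returning the k chunks nearest to the query vector."""
    return {
        "size": k,
        "query": {"knn": {"embedding": {"vector": vector, "k": k}}},
        "_source": ["text", "source"],  # skip returning the large vector field
    }
```

Storing the S3 key alongside each chunk lets the application show users which document an answer came from.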

Implementation: Your Roadmap to Production Domination

Deploy an Amazon OpenSearch Serverless vector search collection

Set up the automated ingestion pipeline

Configure the enterprise-grade retrieval system

Set up Amazon CloudWatch for monitoring

Test, optimize, and watch your AI solution soar!
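Services such as Bedrock and OpenSearch Serverless publish some metrics to CloudWatch on their own, but end-to-end numbers like total query latency come from your application. A minimal sketch, assuming boto3 and a hypothetical `RAG/Production` namespace and metric names, of publishing custom metrics with `put_metric_data`:

```python
def rag_metrics(latency_ms: float, retrieved_chunks: int, model_id: str) -> dict:
    """Keyword arguments for the CloudWatch put_metric_data() call."""
    dims = [{"Name": "ModelId", "Value": model_id}]
    return {
        "Namespace": "RAG/Production",  # hypothetical namespace
        "MetricData": [
            {"MetricName": "QueryLatency", "Value": latency_ms,
             "Unit": "Milliseconds", "Dimensions": dims},
            {"MetricName": "RetrievedChunks", "Value": retrieved_chunks,
             "Unit": "Count", "Dimensions": dims},
        ],
    }


def publish(metrics: dict, region: str = "us-east-1") -> None:
    """Send metrics to CloudWatch; needs boto3 and AWS credentials."""
    import boto3  # deferred so rag_metrics() works without boto3 installed

    boto3.client("cloudwatch", region_name=region).put_metric_data(**metrics)
```

With these metrics in place, CloudWatch alarms can flag latency regressions during the phased rollout.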

Best Practice: Implement a phased rollout to carefully monitor performance and user adoption.
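A simple way to implement a phased rollout is to assign each user to one of 100 buckets by hashing their ID, then widen the enabled percentage over time. Because the hash is deterministic, a user keeps the same experience between visits, and everyone enabled at 10% stays enabled at 50%. The salt value and function name are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, percent: int, salt: str = "rag-v1") -> bool:
    """Deterministically place a user in the first `percent` of 100 buckets."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


print(in_rollout("alice@example.com", 10))
```

Changing the salt reshuffles the buckets, which is useful when starting a new, independent rollout.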

Production-Ready Considerations: Keeping Your AI Secure and Compliant

Encrypt data at rest (using AWS KMS keys) and in transit (TLS)

Enforce least-privilege access controls with AWS IAM

Deploy across multiple Availability Zones for high availability
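As one concrete instance of least privilege, the IAM policy below allows the application to invoke a single Bedrock foundation model and nothing else. The Region and model ID are assumptions; cross-Region inference profiles use different ARNs and are not covered by this sketch.

```python
import json


def invoke_model_policy(region: str, model_id: str) -> dict:
    """IAM policy document allowing invocation of exactly one Bedrock foundation model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
                # Foundation-model ARNs have no account ID, hence the double colon.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }


print(json.dumps(invoke_model_policy("us-east-1", "anthropic.claude-3-sonnet-20240229-v1:0"), indent=2))
```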

Why Your C-Suite Will Champion Step 3

Enterprise-ready: Robust, secure, and scalable for mission-critical applications

Cost-efficient: OpenSearch Serverless scales capacity with demand, so beyond a minimum baseline you pay for what you use

Future-proof: Easily adapt to growing data volumes and evolving business needs

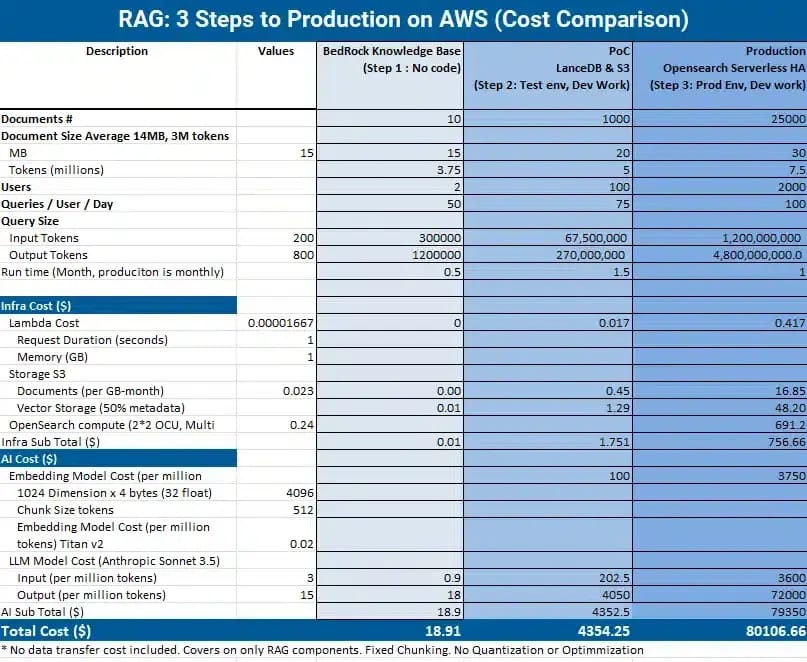

The Bottom Line: Costs and ROI

Understanding the financial impact of your RAG system is crucial for long-term success. Let’s break down the costs and potential returns at each stage:

Step 1: Dipping Your Toes

Infrastructure Cost: Negligible

AI Cost: Approximately $19 for testing

ROI: Invaluable insights and proof of concept with minimal investment

Step 2: Proving the Concept

Infrastructure Cost: $2-3 for S3 and Lambda

AI Cost: Around $4,172.50 (includes embedding and inference)

ROI: Tangible demonstration of AI capabilities, stakeholder buy-in

Step 3: Enterprise Powerhouse

Monthly Infrastructure Cost: $756.66 for OpenSearch Serverless

Monthly AI Cost: Approximately $79,350 (scales with usage)

ROI: Transformative business impact, enhanced decision-making, and competitive advantage
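Because AI costs scale with usage, it helps to model them before rollout. This sketch estimates monthly on-demand inference cost from token counts. The workload and per-1,000-token prices are placeholders, not the figures behind the estimates above, so substitute your own traffic and current Amazon Bedrock pricing.

```python
def monthly_inference_cost(
    queries_per_day: int,
    input_tokens_per_query: int,
    output_tokens_per_query: int,
    usd_per_1k_input: float,
    usd_per_1k_output: float,
    days: int = 30,
) -> float:
    """Estimated monthly model-invocation cost in USD under per-token pricing."""
    per_query = (
        input_tokens_per_query / 1000 * usd_per_1k_input
        + output_tokens_per_query / 1000 * usd_per_1k_output
    )
    return queries_per_day * days * per_query


# Placeholder workload and prices; substitute your own numbers and current rates.
estimate = monthly_inference_cost(
    queries_per_day=10_000,
    input_tokens_per_query=3_000,  # question plus retrieved chunks
    output_tokens_per_query=500,
    usd_per_1k_input=0.003,
    usd_per_1k_output=0.015,
)
print(f"Estimated monthly inference cost: ${estimate:,.2f}")  # $4,950.00
```

In a RAG system the retrieved chunks usually dominate the input tokens, which is why chunk size and the number of chunks per query have a direct effect on cost.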

Pro Tip: Implement our cost optimization strategies to maximize your ROI:

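Use quantization techniques, such as storing embeddings at lower numeric precision

Cache answers to frequently repeated questions

Optimize chunk sizes and embedding dimensions

Match models to tasks, using smaller, less expensive models where they perform well enough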
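Caching can be as simple as storing answers keyed on a normalized question, so repeated questions skip retrieval and model invocation entirely. The sketch below is a hypothetical least-recently-used (LRU) cache that matches exact questions after normalizing case and whitespace. It does not match paraphrases (that would require semantic caching over embeddings), and cached answers must be invalidated when the underlying documents change.

```python
from collections import OrderedDict
from typing import Callable


class AnswerCache:
    """LRU cache of answers keyed on a normalized question string."""

    def __init__(self, max_entries: int = 1000) -> None:
        self.max_entries = max_entries
        self._data = OrderedDict()  # normalized question -> answer

    @staticmethod
    def _key(question: str) -> str:
        # Normalize case and whitespace so trivial variations share an entry.
        return " ".join(question.lower().split())

    def get_or_compute(self, question: str, answer_fn: Callable[[str], str]) -> str:
        key = self._key(question)
        if key in self._data:
            self._data.move_to_end(key)  # mark as most recently used
            return self._data[key]
        answer = answer_fn(question)
        self._data[key] = answer
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry
        return answer
```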