Artificial Intelligence has come a long way in a short time, but what is truly groundbreaking is the rise of AI agents: systems that do more than respond to a user prompt. They reason, act, and adapt.

Thinking of building your own AI agent, or wondering how your existing systems can evolve to match this shift? Understanding how agentic intelligence has progressed clarifies what to build and why.

Check out the biggest AI agent releases of 2024 for a deeper dive into recent breakthroughs.

In this article, we explore the evolution of AI agents, how each phase has contributed to what we have today, and what the future holds. Whether you’re evaluating LLM frameworks or planning your first agent-driven platform, these phases offer a roadmap for what’s possible.

Each phase unlocks specific design choices. From hardcoded logic to self-improving autonomy, we trace the journey that’s shaping tomorrow’s intelligent applications. So, let’s begin!

Phase 1: Script-Based Chatbots & Systems

The earliest form of automation mimicking intelligence emerged through script-based systems—manually written programs designed to handle specific inputs and return fixed responses. These were deterministic by nature and offered no real adaptability or understanding. Their logic trees were brittle and heavily rule-dependent as they lacked semantic awareness.

In enterprise environments, such systems were used in basic FAQ bots or transactional interfaces that operated on if-else decision trees. Tools like Dialogflow’s early rule-based mode or chatbot builders pre-2017 embodied this phase. These bots required constant maintenance and broke down when user input deviated from predefined paths.
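To make the brittleness concrete, here is a minimal sketch of a keyword-driven FAQ bot. The rule table, the replies, and the `scripted_reply` function are hypothetical examples, not taken from any particular chatbot builder.

```python
# Hypothetical rule table: keyword -> canned reply. Nothing here is learned.
RULES = {
    "refund": "Refunds are processed within 5 business days.",
    "hours": "We are open 9am to 5pm, Monday to Friday.",
    "password": "Use the 'Forgot password' link on the login page.",
}
FALLBACK = "Sorry, I didn't understand that. Please rephrase."


def scripted_reply(user_input: str) -> str:
    """Return the first canned reply whose keyword appears in the input."""
    text = user_input.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    return FALLBACK


print(scripted_reply("What are your hours?"))
# Same intent as a refund request, but no "refund" keyword, so the bot falls back.
print(scripted_reply("I want my money back"))
```

The second call shows the failure mode described above: the user means "refund", but the bot only matches literal keywords, so every new phrasing needs another hand-written rule.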

This phase, though limiting, laid the architectural foundation for what came next. It introduced the idea of machines responding to user queries. The concept of input-response automation born here is still fundamental, though now profoundly transformed by the intelligence and autonomy brought by modern AI agents.

Phase 2: Transition to LLM-Powered Response Systems

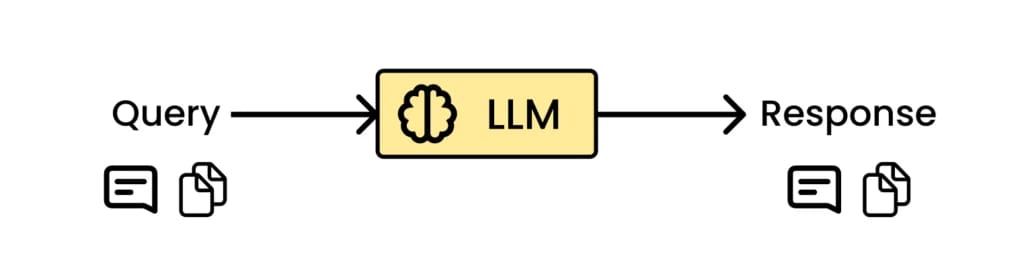

As scripting frameworks reached their functional limits, the emergence of Large Language Models (LLMs) offered a significant leap forward. Unlike static rule-based systems, LLMs introduced context-aware generation and language understanding based on probabilistic learning from massive corpora. This shift allowed AI systems to interpret nuanced inputs and respond with relevance across varied topics.

One significant advancement was the ability to handle structured documents and long-form inputs. Imagine uploading a 30-page whitepaper and asking the LLM to highlight inconsistencies or summarize the document’s key findings. Long-context models made that possible. With context windows expanding to 100K+ tokens, models like GPT-4 Turbo, Claude 3.5, and Gemini 1.5 could reason over full documents without truncation.
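As a rough back-of-the-envelope check, the sketch below estimates whether a document fits in a context window. The 4-characters-per-token ratio, the page size, and the function names are assumptions for illustration; real tokenizers vary by model and language.

```python
def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Crude token estimate; an assumption, not a real tokenizer."""
    return int(len(text) / chars_per_token) + 1


def fits_in_context(text: str, context_window: int = 100_000,
                    reserved_for_answer: int = 2_000) -> bool:
    """Check whether the text fits, leaving room for the model's reply."""
    return estimate_tokens(text) <= context_window - reserved_for_answer


# Simulate a 30-page whitepaper at roughly 3,000 characters per page.
whitepaper = ("word " * 600) * 30

print(estimate_tokens(whitepaper))
print(fits_in_context(whitepaper))                        # 100K-token window
print(fits_in_context(whitepaper, context_window=8_000))  # small window
```

Under these assumptions the whitepaper comes to roughly 22,500 tokens, which fits comfortably in a 100K window but would have to be split for an 8K one.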

Moreover, not all LLMs are the same. From autoregressive models like GPT to Vision Language Models (VLMs) and Large Action Models (LAMs) for action planning, each serves a distinct agentic need.

Want to explore more about which type of LLM is best suited for your AI agent? Check out this breakdown to see how to choose the right model for your use case.

This phase marks the beginning of semantic comprehension and document-level intelligence, as it lays the foundation for scalable automation across enterprise content processing. It paved the way for truly contextual AI agents.

Phase 3: Task-Specific LLMs Through Third-Party API Wrappers

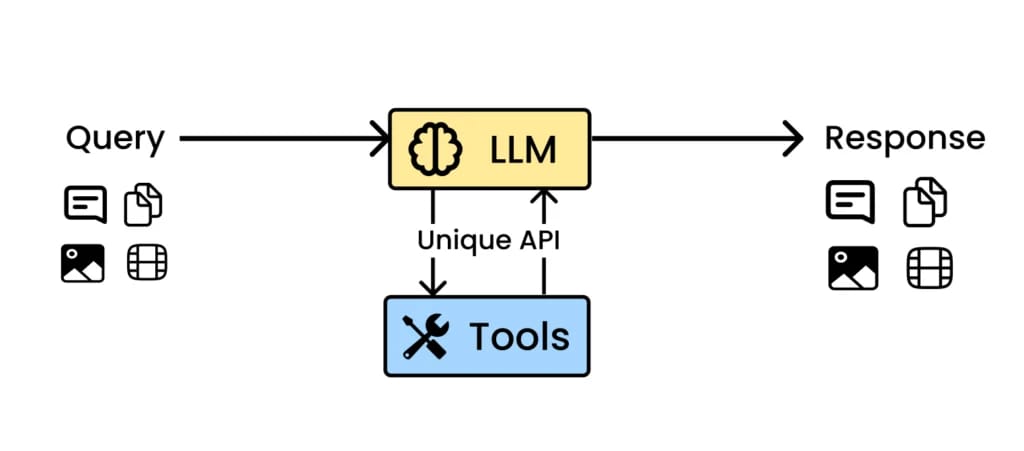

One major limitation of LLMs was their static knowledge. The solution? Let them retrieve information from external databases through third-party APIs.

After integrating generic LLMs for document intelligence, the next evolution focused on delivering task-specific functionality through third-party APIs. This was not full-blown RAG; it was an intermediate stage in which LLMs were exposed as domain-focused APIs rather than general chat endpoints.

This approach allowed teams to build thin wrappers over LLMs. These wrappers enforced scoped instructions and predictable behavior. One LLM could now act as a meeting summarizer. Another could extract policy violations. Each API served one purpose and accepted a fixed input-output contract.
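A thin wrapper of this kind might look like the sketch below. The prompt template, the `MeetingSummary` type, and the `fake_llm` stand-in are all hypothetical; in practice `llm` would be whatever function calls your model provider.

```python
import json
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class MeetingSummary:
    summary: str
    action_items: List[str]


# Scoped instructions: the wrapper does one job and asks for one output shape.
PROMPT_TEMPLATE = (
    "You are a meeting summarizer. Reply with JSON containing the keys "
    '"summary" (string) and "action_items" (list of strings).\n\n'
    "Transcript:\n{transcript}"
)


def summarize_meeting(transcript: str, llm: Callable[[str], str]) -> MeetingSummary:
    """Fixed contract: transcript text in, MeetingSummary out."""
    raw = llm(PROMPT_TEMPLATE.format(transcript=transcript))
    data = json.loads(raw)  # raises ValueError if the model breaks the contract
    return MeetingSummary(str(data["summary"]), [str(i) for i in data["action_items"]])


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return json.dumps({"summary": "Launch moved to May.",
                       "action_items": ["Update roadmap"]})


result = summarize_meeting("Alice: let's move the launch to May.", fake_llm)
print(result)
```

Passing the model in as a plain callable keeps the wrapper testable and lets the same contract sit in front of different providers.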

These third-party APIs created structure around the otherwise open-ended nature of large models. They also improved reproducibility. Tasks that needed consistent performance, such as checklist validation or action extraction, could now be offloaded to these APIs.

Open-source projects like InstructLab and Guidance started enabling this pattern. They allowed fine-grained control of prompt templates. Commercial tools like Vellum or PromptLayer helped version, monitor, and deploy such LLM-powered endpoints.

This stage made LLMs more programmable. It allowed developers to treat AI as predictable, scoped units of logic rather than open chatbots. It bridged the gap between plain LLMs and full autonomous agents.

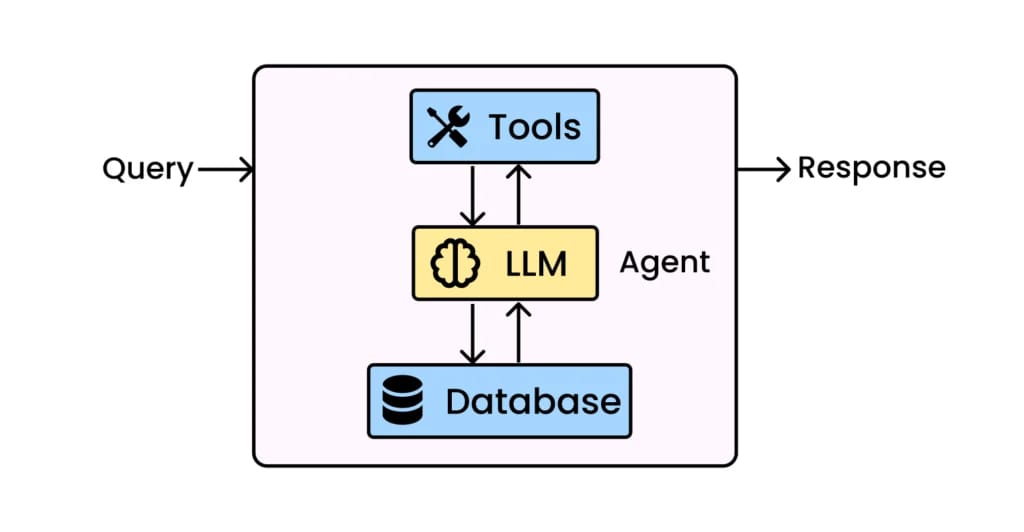

Phase 4: Transition From LLM Chatbots To RAG

The previous stage used third-party APIs for isolated tasks, but it had no persistence layer, so no memory or context carried over between inputs. As you scale AI apps beyond basic prompts, the next logical step is connecting LLMs with structured and unstructured enterprise data, such as CRM, ERP, and transactional databases. This is where Retrieval-Augmented Generation (RAG) comes in, and where it evolves into Agentic RAG: AI agents that don’t just answer but reason, plan, and make decisions using external tools and data.

This phase marks a clear shift from fixed-function APIs to reasoning-driven architectures. RAG architectures let models pull live data and combine it with their trained knowledge for better responses. This enables general-purpose AI agents that interact with evolving datasets while retaining flexibility in design.
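The retrieve-then-generate idea can be sketched in a few lines. This toy version swaps in bag-of-words cosine similarity for a real embedding model and vector database, and the documents and function names are made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List

# Hypothetical enterprise snippets standing in for CRM/ERP records.
DOCS = [
    "Invoice 1042 for Acme Corp is overdue by 12 days.",
    "Acme Corp renewed its support contract in March.",
    "The cafeteria menu changes every Monday.",
]


def vectorize(text: str) -> Counter:
    """Bag-of-words vector; a real system would use learned embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = vectorize(query)
    return sorted(docs, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Combine retrieved context with the question before calling the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("Which Acme invoice is overdue?", DOCS))
```

The prompt that reaches the model now carries the two Acme records and leaves out the unrelated cafeteria note, which is the core of how retrieval grounds a response in current data.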

Unlike plain RAG, which mainly uses vector stores and APIs for retrieval and actions, Agentic RAG emphasizes multi-agent workflows, decision chains, and tool usage orchestration across knowledge systems, internal APIs, and relational databases.

LLM frameworks like LangGraph, AutoGen, CrewAI, and LlamaIndex allow developers to wire up intelligent behaviors into modular and scalable agents. These frameworks act like the middleware between the LLM and your real-world data sources. You define how agents should behave, what tools they can use, and how they collaborate.

Use LangGraph for flow-controlled graphs, CrewAI for teamwork-style agents, LlamaIndex to plug in custom enterprise data, and AutoGen for customizable reasoning agents. For deeper enterprise alignment, you can even explore managed frameworks like AWS Agent Squad or IBM Bee for secure and scalable agent environments.

Not every agent framework fits every use case. This LinkedIn guide provides a practical breakdown to help you choose the right one based on your needs.

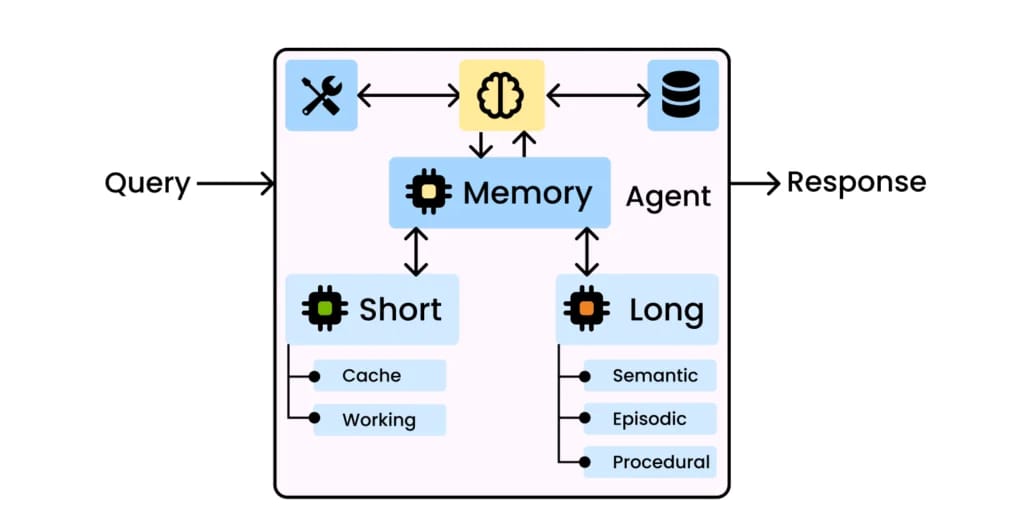

Phase 5: Shift of AI Agents From Patterned Reactions to Memory-Driven Intelligence

The previous phase of evolution focused on retrieval-augmented generation (RAG) — systems that could fetch external knowledge via tools like vector databases. Now, memory-enabled agents have moved one step further. They do not just respond based on the current context—they remember. They learn from each interaction, update themselves, and apply that experience later. This difference enables continuity and personalization in ways that reflex agents cannot achieve. In short, memory transforms an agent from a task-executor to a continuously improving thinker.

This layered architecture captures how agents manage different types of memory to simulate human-like reasoning. It separates memory into short-term and long-term forms. Short-term memory includes Working and Cache Memory, allowing rapid, temporary context retention. Long-term memory includes Episodic, Semantic, and Procedural Memory, which form the foundation for persistent knowledge, experience, and skill. Together, these allow agents to reason not just from rules but from memory, which adds depth to their decisions.

🔍 Memory as the Differentiator

Episodic Memory gives agents the ability to recall past user interactions or specific events.

Semantic Memory lets them connect facts and concepts—essential for relational reasoning.

Procedural Memory builds automation from learned behavior and task repetition.

These aren’t abstract ideas. They’re implemented in tools like LangGraph, AutoGen, or CrewAI, which enable persistent agents that can recall user intents across sessions or optimize workflows based on past performance. You can explore this shift in greater depth through this LinkedIn post on AI Memory Systems that visualizes and explains the practical architecture of AI memory layers.
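The memory types described above can be sketched with plain data structures. This is a framework-agnostic illustration, not how LangGraph, AutoGen, or CrewAI implement memory; the class and method names are hypothetical.

```python
from collections import Counter, deque
from typing import Dict, List, Optional


class AgentMemory:
    def __init__(self, working_size: int = 3) -> None:
        self.working = deque(maxlen=working_size)  # short-term: recent turns only
        self.episodic: List[dict] = []             # long-term: what happened, per session
        self.semantic: Dict[str, str] = {}         # long-term: facts and concepts
        self.procedural: Counter = Counter()       # long-term: routines that worked

    def observe(self, session: str, message: str) -> None:
        """Store a turn in both short-term and episodic memory."""
        self.working.append(message)
        self.episodic.append({"session": session, "message": message})

    def learn_fact(self, key: str, value: str) -> None:
        self.semantic[key] = value

    def record_success(self, routine: str) -> None:
        self.procedural[routine] += 1

    def recall_session(self, session: str) -> List[str]:
        return [e["message"] for e in self.episodic if e["session"] == session]

    def preferred_routine(self) -> Optional[str]:
        """Return the routine with the most recorded successes, if any."""
        return self.procedural.most_common(1)[0][0] if self.procedural else None


memory = AgentMemory(working_size=2)
memory.observe("session-1", "I prefer weekly reports")
memory.observe("session-1", "Send them on Fridays")
memory.observe("session-2", "Any update?")
memory.learn_fact("report_frequency", "weekly")
memory.record_success("email_report")

print(list(memory.working))                # oldest turn has been evicted
print(memory.recall_session("session-1"))  # earlier session is still recallable
```

Working memory forgets the first message once it is full, while episodic memory still returns it in a later session. That gap is what separates a context-only agent from a memory-driven one.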

Phase 6: Introduction Of MCP Server For AI Agents With Better Tooling

The previous phase introduced memory that enabled agents to recall and learn from past interactions. But the environment was still mostly isolated: each agent worked alone, with limited tool context and no real interconnectivity. As agents evolve, they now need to talk to multiple systems, share context, and orchestrate diverse APIs in real time.

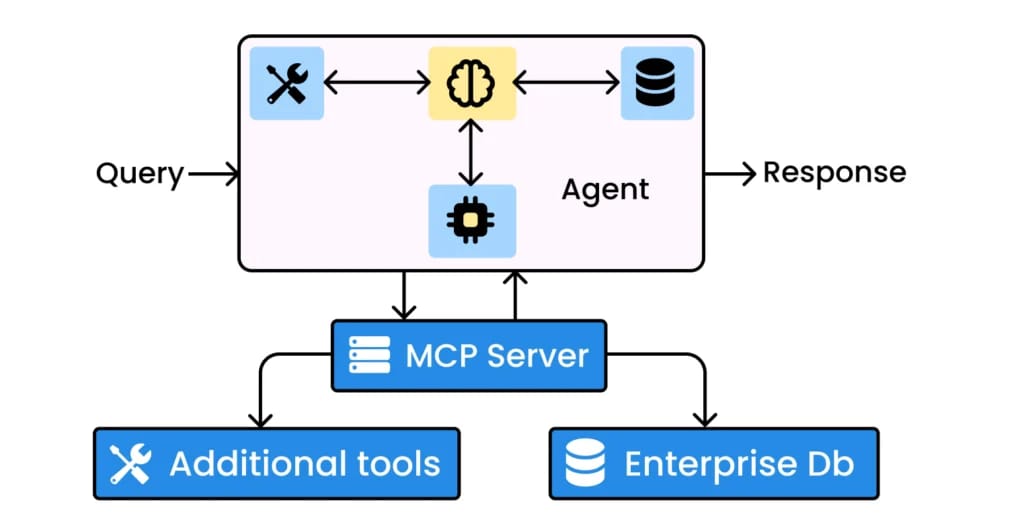

This is where MCP (Model Context Protocol) comes in. MCP offers a standardized interface for connecting AI agents to a wide variety of tools — from Google Drive to GitHub to internal databases. Instead of hardcoding tool-specific integrations, developers can now use MCP servers as modular and reusable connectors that expose tool functionality in a consistent format. Agents configured with MCP can fetch context, read documents, execute actions, or update systems while using additional tools and enterprise data.

Diagram Summary: The MCP architecture adds a context layer between the AI agent and external tools. The agent connects to one or more MCP servers. These servers expose standardized capabilities like search, create, read, or update. The AI model sees this structured interface and can reason over tools dynamically, with secure access and context-awareness built in.

MCP decouples reasoning from tool execution. This means agents can now reason about which tool to use, when to use it, and how — all through a unified protocol. It simplifies multi-step workflows, lets you scale agents across different environments, and ensures real-world actions are traceable, context-rich, and secure.
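The sketch below illustrates the idea of discovering and calling tools through one uniform interface. It is a toy, in-process stand-in and not the official MCP SDK. The `tools/list` and `tools/call` method names follow MCP's JSON-RPC conventions, but the message shapes are simplified, and `ToyToolServer`, `read_note`, and `NOTES` are hypothetical.

```python
from typing import Any, Callable, Dict


class ToyToolServer:
    """In-process stand-in for an MCP server. This is not the official SDK."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def register(self, name: str, description: str, fn: Callable[..., str]) -> None:
        self._tools[name] = {"description": description, "fn": fn}

    def _reply(self, request: Dict[str, Any], key: str, value: Any) -> Dict[str, Any]:
        return {"jsonrpc": "2.0", "id": request.get("id"), key: value}

    def handle(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Dispatch a request to tool discovery or tool execution."""
        method = request.get("method")
        params = request.get("params", {})
        if method == "tools/list":
            tools = [{"name": n, "description": t["description"]}
                     for n, t in self._tools.items()]
            return self._reply(request, "result", {"tools": tools})
        if method == "tools/call":
            tool = self._tools.get(params.get("name"))
            if tool is None:
                return self._reply(request, "error",
                                   {"code": -32602, "message": "Unknown tool"})
            text = tool["fn"](**params.get("arguments", {}))
            return self._reply(request, "result",
                               {"content": [{"type": "text", "text": text}]})
        return self._reply(request, "error",
                           {"code": -32601, "message": "Method not found"})


NOTES = {"q3": "Q3 revenue grew 8 percent."}  # hypothetical data source

server = ToyToolServer()
server.register("read_note", "Return a stored note by id",
                lambda note_id: NOTES.get(note_id, "not found"))

listing = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                      "params": {"name": "read_note", "arguments": {"note_id": "q3"}}})
print(listing["result"]["tools"])
print(call["result"]["content"][0]["text"])
```

The agent side never imports `NOTES` or knows how `read_note` works. It only sees the tool listing and the call result, which is the decoupling of reasoning from execution described above.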

This stage marks a key shift: from passive, closed agents to connected, tool-empowered systems that can work with real data and perform meaningful tasks across the ecosystem.

Phase 7: Multi-Agent Communication and The Interoperability Protocols

The previous stage introduced the MCP server, enabling AI agents to access external tools securely. But despite that, each task was still handled by a single agent: one mind with one action path. As tasks grow in complexity and specialization, this model becomes limiting.

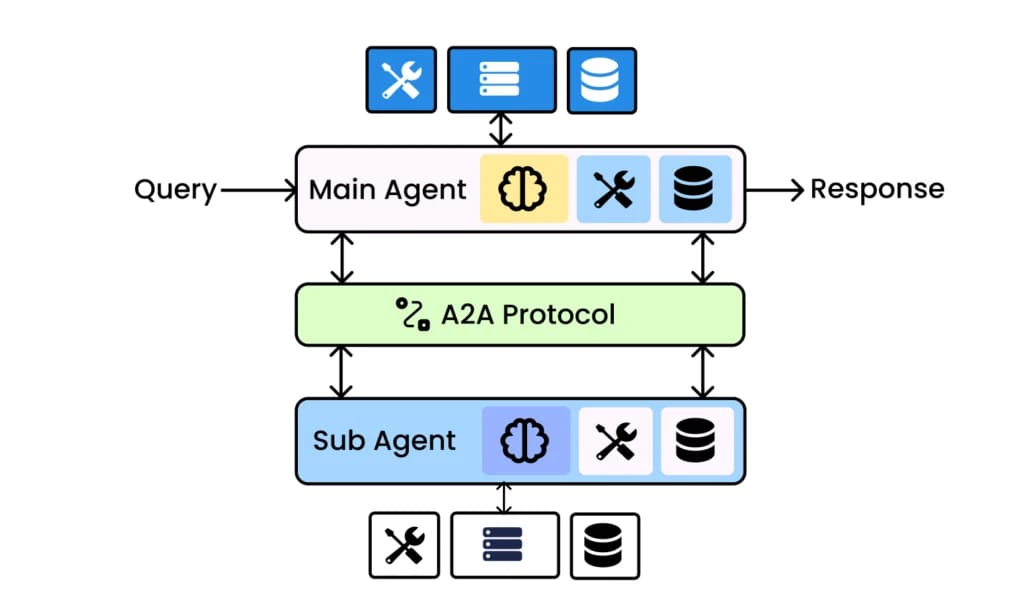

This phase introduces Multi-Agent Communication, where multiple AI agents interact to solve problems collaboratively. Each agent has a dedicated role: one may retrieve data, another may analyze it, and a third may make strategic decisions. These agents coordinate in real time, exchanging memory, tool access, and task state via shared protocols.

Key standards in this space are multi-agent protocols such as A2A, ACP, ANP, and others, which define how agents discover each other, share goals, exchange messages, and manage negotiation or delegation. They act as a structured language for autonomous agents to collaborate efficiently, even across different platforms or organizations.
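To illustrate discovery, message exchange, and delegation, here is a minimal in-process sketch. The `Message` envelope and `AgentRegistry` are hypothetical and do not reproduce the wire format of A2A, ACP, or ANP; the three agents are stubbed with simple functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Message:
    sender: str
    recipient: str
    task: str
    payload: dict = field(default_factory=dict)


class AgentRegistry:
    """Toy directory where agents advertise one skill and receive messages."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[Message], dict]] = {}
        self._skills: Dict[str, str] = {}  # skill -> agent name
        self.log: List[Message] = []       # trace of every exchanged message

    def register(self, name: str, skill: str, handler: Callable[[Message], dict]) -> None:
        self._handlers[name] = handler
        self._skills[skill] = name

    def discover(self, skill: str) -> str:
        return self._skills[skill]

    def send(self, message: Message) -> dict:
        self.log.append(message)
        return self._handlers[message.recipient](message)


registry = AgentRegistry()
registry.register("retriever", "retrieve", lambda m: {"values": [12, 18, 30]})
registry.register("analyst", "analyze",
                  lambda m: {"mean": sum(m.payload["values"]) / len(m.payload["values"])})
registry.register("decider", "decide",
                  lambda m: {"action": "escalate" if m.payload["mean"] > 15 else "wait"})


def run_pipeline(reg: AgentRegistry) -> dict:
    """Delegate each step to whichever agent advertises the needed skill."""
    payload: dict = {}
    for skill in ("retrieve", "analyze", "decide"):
        agent = reg.discover(skill)
        payload = reg.send(Message("coordinator", agent, skill, payload))
    return payload


print(run_pipeline(registry))
print([m.recipient for m in registry.log])
```

The coordinator looks agents up by skill rather than by name, so a different analyst can be swapped in without changing the pipeline. Real interoperability protocols aim for the same decoupling across platforms and organizations.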

Diagram Summary: The diagram shows multiple LLM agents connected through an orchestration layer and communicating over A2A. Each agent performs a defined function and shares intermediate results. Together, they handle workflows that demand specialization and inter-agent coordination.

Multi-agent systems mimic human teams: each AI agent focuses on what it does best. This improves efficiency, accuracy, and scalability. With protocols like A2A for agent-to-agent communication and MCP for tool access, you can now build distributed AI systems that think, debate, and act together. This stage brings your system closer to real distributed intelligence.

Phase 8: The Future Architecture of AI Agents

In the last phase, agents worked together to perform distinct activities. Each had its task, and coordination happened through a shared server. But there was no leader: no single agent could take full ownership or drive the whole decision tree. This phase changes that. It adds feedback loops across all layers (input, orchestration, agent, and output) so that the system can reflect, adapt, and improve over time.

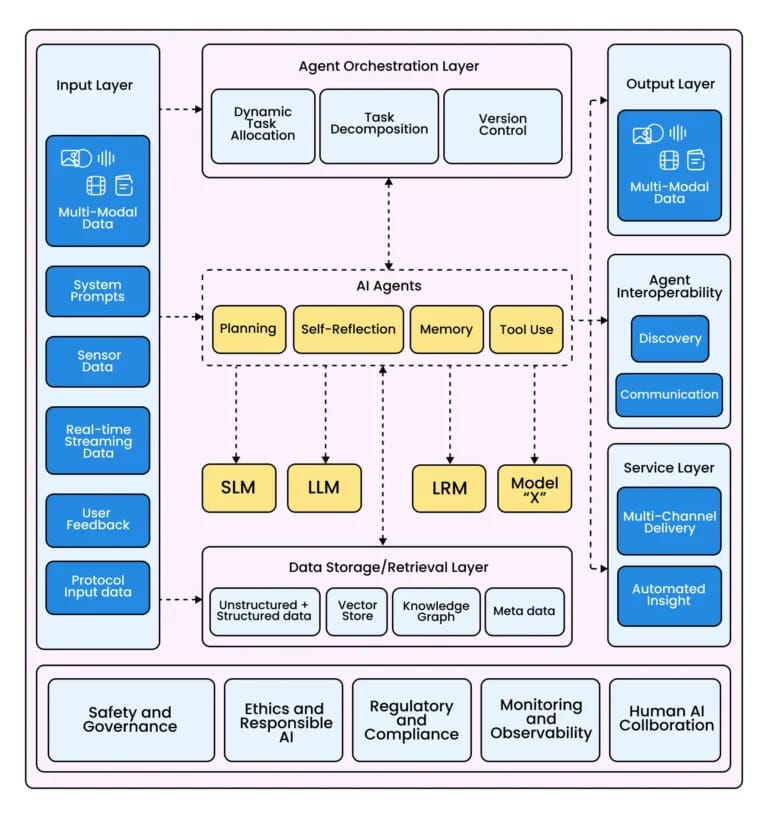

This diagram illustrates a future where AI agents function as intelligent teammates. They learn continually by adapting, coordinating, and managing complex tasks across diverse data sources and environments.

At the very beginning, we have the Input Layer, which collects multi-modal data—think images, audio, video, and text. It also listens to sensor data, real-time streams, protocols, and even user feedback. This broad spectrum of input fuels agents with enough context to perform future tasks.

Next, the Agent Orchestration Layer is the brain behind the agents’ teamwork. It handles:

Dynamic Task Allocation: Assigns tasks based on skills or capacity

Task Decomposition: Breaks significant goals into manageable chunks

Version Control: Keeps track of iterations and improvements

So, you don’t want one AI agent doing everything. This layer ensures the right agent is doing the right job at the right time.
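Two of the orchestration duties listed above, task decomposition and dynamic task allocation, can be sketched as follows. Version control is left out, the decomposition is hard-coded where a real system might ask a model, and the worker names and skills are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Worker:
    name: str
    skills: Set[str]
    capacity: int  # how many more tasks this agent can accept


def decompose(goal: str) -> List[dict]:
    """Break a goal into skill-tagged subtasks (hard-coded for illustration)."""
    return [
        {"task": f"collect data for {goal}", "skill": "retrieval"},
        {"task": f"analyze data for {goal}", "skill": "analysis"},
        {"task": f"write report for {goal}", "skill": "writing"},
    ]


def allocate(tasks: List[dict], workers: List[Worker]) -> Dict[str, str]:
    """Assign each task to the skilled worker with the most spare capacity."""
    assignment: Dict[str, str] = {}
    for task in tasks:
        candidates = [w for w in workers
                      if task["skill"] in w.skills and w.capacity > 0]
        if not candidates:
            assignment[task["task"]] = "unassigned"
            continue
        chosen = max(candidates, key=lambda w: w.capacity)
        chosen.capacity -= 1
        assignment[task["task"]] = chosen.name
    return assignment


team = [
    Worker("scout", {"retrieval"}, capacity=1),
    Worker("analyst", {"analysis", "writing"}, capacity=1),
    Worker("writer", {"writing"}, capacity=2),
]
plan = allocate(decompose("Q3 churn"), team)
for task, owner in plan.items():
    print(f"{owner}: {task}")
```

Here the analyst could also write the report, but its capacity is used up by the analysis task, so the writing step goes to the writer. That is allocation by both skill and capacity.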

At the heart of this system are the AI Agents, and they’re not just simple bots. They include:

Planning: Strategizes steps ahead

Self-Reflection: Evaluates performance, adjusts behavior

Memory: Remembers past decisions and outcomes

Tool Use: Calls APIs or services to solve real problems

Each of these connects to different model types, such as SLMs (Small Language Models), LLMs (Large Language Models), LRMs (Large Reasoning Models), and more. These agents act with purpose!
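The four capabilities listed above (planning, self-reflection, memory, and tool use) can be combined into one small loop. This is a deliberately tiny sketch: the tools are arithmetic functions, the candidate plans are fixed, and all names are hypothetical.

```python
from typing import Callable, Dict, List

# Tool use: hypothetical tools the agent is allowed to call.
TOOLS: Dict[str, Callable[[float], float]] = {
    "double": lambda x: x * 2,
    "add_ten": lambda x: x + 10,
}

CANDIDATE_PLANS: List[List[str]] = [["add_ten"], ["double"], ["double", "add_ten"]]


def run_agent(start: float, target: float, max_attempts: int = 3) -> List[dict]:
    """Try plans until the result reaches the target, remembering each outcome."""
    memory: List[dict] = []  # Memory: past plans and their outcomes
    for _ in range(max_attempts):
        # Planning: pick the first plan that memory does not already mark as tried.
        tried = [m["plan"] for m in memory]
        plan = next((p for p in CANDIDATE_PLANS if p not in tried), None)
        if plan is None:
            break
        # Tool use: execute each step of the plan in order.
        value = start
        for step in plan:
            value = TOOLS[step](value)
        # Self-reflection: judge the outcome and store it for the next attempt.
        success = value >= target
        memory.append({"plan": plan, "result": value, "success": success})
        if success:
            break
    return memory


history = run_agent(start=8, target=25)
for entry in history:
    print(entry)
```

The first two plans fall short of the target, and the agent only succeeds on the third because its memory stops it from repeating a failed plan. Production agents replace the fixed plan list with model-generated plans, but the loop structure is similar.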

The Data Storage/Retrieval Layer stores and processes structured and unstructured data, using Vector Stores for fast similarity search, Knowledge Graphs to capture relationships, and Metadata for context-rich retrieval.

Once tasks are done, outputs are returned in multi-modal formats (e.g., visuals, summaries, dashboards). It’s all about clear, actionable outcomes that can plug into other systems or reach the user directly.

Through the Service Layer, the AI agents communicate with other systems (discovery + API calls) and deliver insights across channels—from Slack to dashboards or IoT devices.

In short, Phase 8 isn’t just about making smarter agents. It is about turning AI into reliable and accountable digital coworkers. These agents can plan like a strategist, adapt like a learner, act like a tool-user, and reflect like a mentor, all while staying grounded in ethical principles and data transparency.

Final Thoughts On The Future Of Autonomous AI Agents

From rigid script-based bots to self-improving multi-agent systems, we’ve walked through the primary stages in the evolution of AI agents. Each phase introduced a new capability—context awareness, memory, tool orchestration, collaboration, and finally, the future architecture of AI Agents. Together, these layers form the foundation for autonomous AI agents that can reason, act, and evolve.

If you’re looking to create AI agents or understand how to apply them in your business, it’s critical to see the whole picture—from basic design choices to long-term architecture. For a deeper, structured guide to what AI agents are and how to build them, check out our new book:

It’s packed with real-world insights, use cases, and a clear framework to move from ideas to implementation. You’ll walk away with the clarity and confidence to lead your organization into the agent-powered future.