Hi everyone! 👋

It’s good to be back with another week of updates on AI agents and everything tech.

Without wasting your time, let’s get into last week’s biggest updates:

AI’s Trillion-Dollar Opportunity Lies in Context Graphs

What's Happening:

AI agents are separating workflow execution from traditional systems of record. Decision traces, exceptions, approvals, and precedents are emerging as a missing data layer.

Key Points :

Why rules alone are insufficient for autonomous agents

How context graphs capture cross-system decision history

The structural advantage of agent orchestration layers
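
To make the idea concrete, here is a rough Python sketch of what a decision trace and a context graph could look like. Everything in it (`DecisionTrace`, `ContextGraph`, the field names, the sample decisions) is a hypothetical illustration, not a schema from the original piece.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DecisionTrace:
    """One recorded decision and the context that justified it (hypothetical schema)."""
    decision_id: str
    system: str                          # system where the action landed
    action: str
    approved_by: str
    exception_to: Optional[str] = None   # rule that was waived, if any
    precedents: List[str] = field(default_factory=list)  # earlier decision_ids


class ContextGraph:
    """Stores traces and follows precedent links across systems."""

    def __init__(self) -> None:
        self.traces: Dict[str, DecisionTrace] = {}

    def add(self, trace: DecisionTrace) -> None:
        self.traces[trace.decision_id] = trace

    def lineage(self, decision_id: str) -> List[str]:
        """Return the decision plus every precedent reachable from it."""
        seen: List[str] = []
        stack = [decision_id]
        while stack:
            current = stack.pop()
            if current in seen or current not in self.traces:
                continue
            seen.append(current)
            stack.extend(self.traces[current].precedents)
        return seen


graph = ContextGraph()
graph.add(DecisionTrace(decision_id="d1", system="crm", action="20% discount",
                        approved_by="vp_sales", exception_to="max_discount_10"))
graph.add(DecisionTrace(decision_id="d2", system="billing", action="20% renewal discount",
                        approved_by="finance_lead", precedents=["d1"]))
graph.add(DecisionTrace(decision_id="d3", system="support", action="waive late fee",
                        approved_by="support_mgr", precedents=["d2"]))

print(graph.lineage("d3"))  # ['d3', 'd2', 'd1']
```

The point of the sketch is that a single lookup can walk from a support decision back through billing to the original sales exception, which is the cross-system history that rules alone do not record.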

Why It Matters:

Enterprises lack durable records of how decisions are made. Context graphs turn organizational judgment into a scalable system of record.

Gmail Enters the Gemini Era

What's Happening:

Gmail is evolving into a proactive AI-powered inbox assistant using Gemini. New features focus on summarization, writing assistance, and intelligent prioritization for email at scale.

Key Points :

AI Overviews that summarize long threads and answer inbox questions

Help Me Write, Suggested Replies, and Proofread for faster email composition

AI Inbox to surface priorities, to-dos, and high-signal messages

Why It Matters:

Email volume continues to increase while attention remains limited. Gmail’s Gemini-powered features shift email from passive storage to active execution.

Real-World Agent Examples Powered by Gemini 3

What's Happening:

Developers are moving beyond prototypes to production-grade agentic workflows. Gemini 3 is positioned as the orchestration layer enabling reliable, real-world AI agents.

Key Points :

Cloneable agent demos built with six open-source frameworks

Browser automation, multi-agent coordination, and memory-driven workflows

Production patterns addressing reasoning depth, state, and reliability

Why It Matters:

Agent reliability has been the primary barrier to real-world deployment. These examples demonstrate how controlled orchestration and state management make agentic AI viable at production scale.

Tailwind Faces Massive 80% Revenue Drop because of AI

What's Happening:

Tailwind Labs acknowledged a major internal restructuring after AI-driven shifts reduced documentation traffic and revenue. Despite strong adoption of Tailwind, the company laid off roughly 75% of its engineering team as commercial sustainability weakened.

Key Points:

Most users now work with Tailwind CSS through AI agents and never touch the documentation

The project is open source, and paid promotions in the Tailwind documentation kept things running

After 2023, that revenue dropped 80%, leading to layoffs of 3 out of 4 engineers

After Tailwind’s founder asked for help, companies like Google, Lovable, and Vercel came forward

Why It Matters:

AI assistants are changing how developers discover and use documentation. Open-source popularity no longer guarantees commercial sustainability. Tailwind’s response highlights a growing tension between community tooling and business survival in the AI era.

Introducing ChatGPT Health

What's Happening:

ChatGPT Health is a dedicated experience designed for health and wellness use cases. It securely combines personal health information with AI to support informed decision-making.

Key Points :

Secure connections to medical records and wellness apps

A separate health workspace with enhanced privacy and encryption

Physician-guided design and evaluation focused on safety and clarity

Why It Matters:

Health information is fragmented across apps, portals, and documents. ChatGPT Health centralizes context while preserving privacy. It enables users to better understand patterns and prepare for clinical conversations.

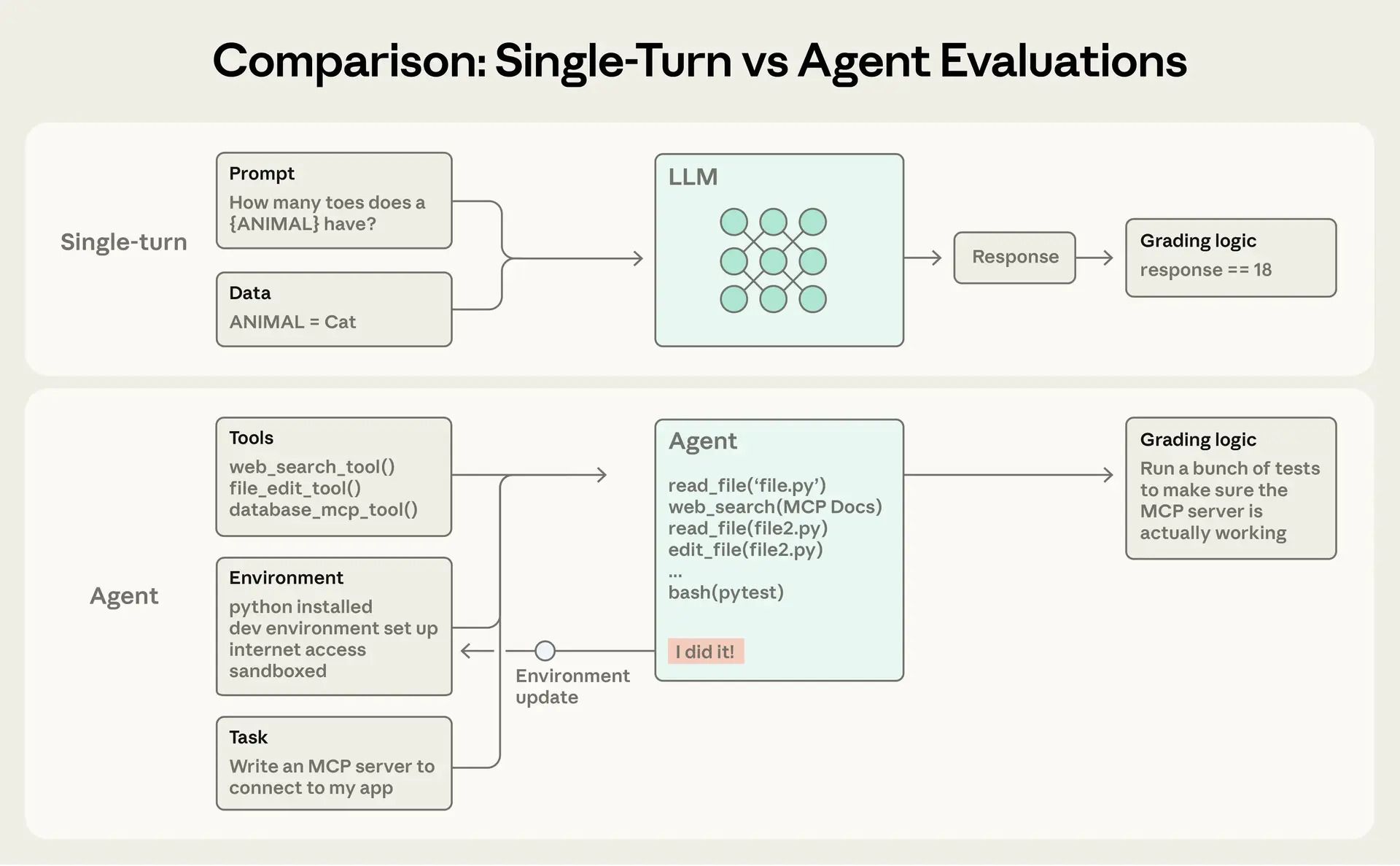

Anthropic Research Brings Clarity to Evaluating AI Agents

What's Happening:

Anthropic released Demystifying Evals for AI Agents, a practical guide explaining why robust evaluation systems are essential for scaling agents reliably, and why ad-hoc testing fails once agents enter production.

Key Points:

Why agent evals are different from single-turn LLM evals and must account for multi-step, stateful behavior

A clear breakdown of multi-turn eval structure:

Tasks (the objective)

Trials (multiple runs to capture variance)

Graders (how success is judged)
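
Here is a minimal Python sketch of that Tasks / Trials / Graders structure. It is not Anthropic's implementation: `Task`, `run_trials`, `exact_match_grader`, and `make_flaky_agent` are hypothetical names, and the agent is a single-turn stand-in, whereas a real agent eval would grade a multi-step transcript.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    """The objective: a prompt plus what the grader needs to judge it."""
    name: str
    prompt: str
    expected: str


# A grader decides whether one trial's output counts as a success.
Grader = Callable[[Task, str], bool]


def exact_match_grader(task: Task, output: str) -> bool:
    return output.strip() == task.expected


def run_trials(agent: Callable[[str], str], task: Task,
               grader: Grader, n_trials: int = 5) -> float:
    """Run the agent n_trials times on one task and return the pass rate."""
    passes = sum(grader(task, agent(task.prompt)) for _ in range(n_trials))
    return passes / n_trials


def make_flaky_agent(fail_every: int) -> Callable[[str], str]:
    """Hypothetical stand-in for a real agent: answers wrongly on every Nth call."""
    calls = 0

    def agent(prompt: str) -> str:
        nonlocal calls
        calls += 1
        return "wrong" if calls % fail_every == 0 else "4"

    return agent


task = Task(name="addition", prompt="What is 2 + 2?", expected="4")
pass_rate = run_trials(make_flaky_agent(fail_every=3), task,
                       exact_match_grader, n_trials=6)
print(f"{task.name}: pass rate {pass_rate:.2f}")  # addition: pass rate 0.67
```

Running several trials per task is what exposes the variance: a single run of this agent would report either a clean pass or a clean fail, and both would be misleading.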

Why It Matters:

As agents grow more autonomous, failures become harder to diagnose. Anthropic makes it clear that without structured evals, teams debug blindly, reacting to production issues instead of preventing them.

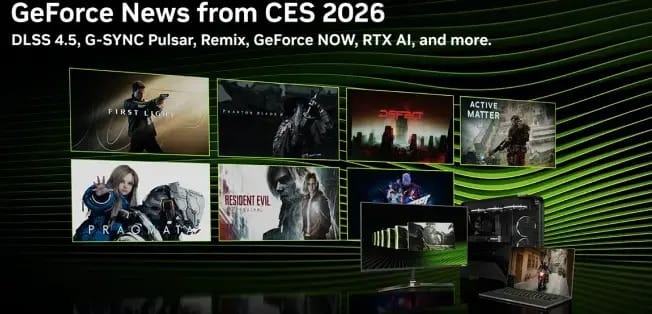

NVIDIA Expands AI Rendering Leadership at CES 2026

What's Happening:

At CES 2026, NVIDIA announced DLSS 4.5, introducing second-generation transformer models and 6X Dynamic Multi Frame Generation. The updates extend AI-driven rendering across gaming, creation, and hardware ecosystems.

Key Points :

DLSS 4.5 Super Resolution with improved image quality in 400+ games and apps

6X Dynamic Multi Frame Generation targeting 240+ FPS 4K path-traced gaming

New G-SYNC Pulsar monitors delivering over 1,000 Hz effective motion clarity

Why It Matters:

AI-based rendering is becoming foundational to real-time graphics performance. NVIDIA’s CES announcements reinforce its lead across gaming, AI workloads, and display technology.

JPMorgan Replaces Proxy Advisors With In-House AI

What's Happening:

JPMorgan Chase & Co.’s asset management division has fully eliminated the use of external proxy advisors. The firm has launched an internal AI system, Proxy IQ, to analyze proxy data from roughly 3,000 annual shareholder meetings.

Key Points :

Full removal of third-party proxy advisors such as Institutional Shareholder Services and Glass Lewis

Deployment of AI to aggregate filings, research, and voting data

Faster, internally aligned shareholder voting recommendations

Why It Matters:

Proxy voting has a significant influence on corporate governance outcomes. By internalizing analysis with AI, JPMorgan gains greater control, speed, and consistency. This move signals a broader shift toward in-house AI decision systems in asset management.

Lenovo and Motorola Introduce Qira, a Cross-Device Ambient Intelligence

What's Happening:

At CES 2026, Lenovo and Motorola unveiled Qira, a built-in personal ambient intelligence. Qira operates at the system level across devices, providing continuous, context-aware assistance without app switching.

Key Points :

A single intelligence spanning PCs, smartphones, tablets, and wearables

System-level presence enabling proactive actions, perception, and continuity

Hybrid on-device and cloud AI architecture with privacy-by-design

Why It Matters:

AI is shifting from app-based assistants to ambient, system-wide intelligence. Qira positions Lenovo and Motorola to deliver seamless, cross-device experiences. This marks a step toward AI that works continuously in the background, not on demand.

Microsoft Introduces Copilot Checkout and Brand Agents

What's Happening:

Microsoft unveiled Copilot Checkout and Brand Agents to enable AI-driven commerce inside conversations. The new capabilities allow shoppers to move from intent to purchase without leaving AI or brand-owned surfaces.

Key Points :

Copilot Checkout for frictionless, in-conversation purchasing without redirects

Brand Agents that guide shoppers on merchant websites using brand voice and catalog data

Native integrations with Shopify, PayPal, Stripe, and major commerce platforms

Why It Matters:

Shopping journeys are collapsing into single AI interactions. These tools let merchants retain ownership of checkout and customer data. They signal a shift toward agentic commerce where conversation directly drives conversion.

NVIDIA Launches NeMo Agent Toolkit Course to Improve Agent Reliability

What's Happening:

NVIDIA introduced a new course on the open-source NeMo Agent Toolkit focused on making AI agents production-ready. The course addresses common reliability gaps between agent demos and deployable systems.

Key Points :

Configuration-driven workflows for building and deploying agents

Built-in observability, tracing, and evaluation to debug reasoning

Multi-agent orchestration with authentication and rate limiting

Why It Matters:

Agent reliability remains a major blocker to enterprise adoption. NeMo Agent Toolkit provides standardized tooling to observe, evaluate, and harden agents. This moves agentic AI closer to consistent, production-grade deployment.

Boris Cherny Shares His Claude Code Workflow

What's Happening:

Boris Cherny shared how he uses Claude Code for high-frequency production workflows. The approach emphasizes automation, verification, and repeatability over ad-hoc prompting.

Key Points :

Slash commands and subagents to automate common inner-loop workflows

Hooks for formatting, permissions management, and long-running task verification

Shared configurations enabling agents to use tools like Slack, BigQuery, and Sentry

Why It Matters:

Most agent failures stem from missing feedback and verification loops. Cherny’s setup shows how structured workflows can significantly improve output quality. It offers a practical blueprint for treating coding agents like reliable team members.

Cursor Introduces Dynamic Context Discovery for Coding Agents

What's Happening:

Cursor outlined a new pattern called dynamic context discovery to improve agent performance and token efficiency. Instead of loading static context up front, agents selectively pull only the information they need during execution.

Key Points :

Treating tool outputs, chat history, and terminal sessions as files

Dynamic loading of Agent Skills and MCP tools to reduce context bloat

Measured token savings of nearly 47% in MCP-heavy workflows
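
The "outputs as files" idea can be sketched in a few lines of Python. This is only an illustration of the pattern under simple assumptions, not Cursor's implementation: `ContextStore`, `record`, `read_lines`, and the 200-character inline limit are all hypothetical.

```python
import tempfile
from pathlib import Path


class ContextStore:
    """Keeps bulky tool outputs on disk and hands the agent short references."""

    def __init__(self, root: Path, inline_limit: int = 200):
        self.root = root
        self.inline_limit = inline_limit  # assumed threshold, in characters

    def record(self, name: str, output: str) -> str:
        """Return text for the prompt: the output itself if small, else a pointer."""
        if len(output) <= self.inline_limit:
            return output
        path = self.root / f"{name}.txt"
        path.write_text(output, encoding="utf-8")
        n_lines = output.count("\n") + 1
        return f"[output saved to {path.name}: {n_lines} lines, {len(output)} chars]"

    def read_lines(self, name: str, start: int, end: int) -> str:
        """Load only lines start..end (1-indexed, inclusive) when the agent asks."""
        text = (self.root / f"{name}.txt").read_text(encoding="utf-8")
        return "\n".join(text.splitlines()[start - 1:end])


with tempfile.TemporaryDirectory() as tmp:
    store = ContextStore(Path(tmp))
    log = "\n".join(f"line {i}: ok" for i in range(1, 501))
    pointer = store.record("test_run", log)          # goes into the prompt
    excerpt = store.read_lines("test_run", 499, 500)  # pulled only on demand
    print(pointer)
    print(excerpt)
```

The prompt carries a one-line pointer instead of the 500-line log, and the agent reads the slice it actually needs, which is where the token savings come from.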

Why It Matters:

Static prompts limit agent scalability and reliability over long workflows. Dynamic context discovery improves reasoning quality while reducing token usage. This approach reflects a broader shift toward self-directed, production-grade coding agents.

Claude Code Team Open-Sources Code-Simplifier Agent

What's Happening:

Boris Cherny announced the open-sourcing of the code-simplifier agent used internally by the Claude Code team. The agent is designed to refactor and simplify complex pull requests after coding sessions.

Key Points :

An automated agent for cleaning and simplifying PR code changes

Easy installation via Claude’s plugin marketplace

Intended use at the end of long coding sessions or for legacy code cleanup

Why It Matters:

Code quality often degrades during long agent-driven coding runs. A dedicated simplification step helps preserve readability and maintainability. This reflects a growing trend toward modular, task-specific agents in developer workflows.

Ralph Wiggum Becomes a Defining Pattern for Agentic Coding

What's Happening:

The Ralph Wiggum plugin for Claude Code has emerged as a powerful method for long-running autonomous coding. By looping execution until explicit success conditions are met, it shifts AI from a pair programmer to a persistent agent.

Key Points :

A feedback-loop technique that feeds failures back into the model

An official plugin formalized by Anthropic using stop hooks and completion promises

Proven results in overnight repo generation, large refactors, and cost-efficient automation

Why It Matters:

Traditional human-in-the-loop workflows limit agent autonomy. Ralph Wiggum demonstrates how persistence and verification enable reliable “night-shift” agents. It marks a practical step toward scalable, agentic software development.

Anthropic Releases Claude Code 2.1 with Major Agent Workflow Updates

What's Happening:

Anthropic has released Claude Code 2.1, introducing significant improvements to agent usability and developer workflows. The update focuses on faster iteration, better control, and more flexible agent configuration.

Key Points :

Native newline support, session teleporting, and multilingual model responses

Enhanced agent and skill hooks, forked context, hot reload, and custom agents

Improved tool permissions, reliability, and uninterrupted agent execution

Why It Matters:

Developer productivity is increasingly shaped by agent-first tooling. Claude Code 2.1 reduces friction in long-running and multi-agent workflows. It reflects the rapid maturation of coding agents toward production-grade development.

Thanks for reading. — Rakesh’s Newsletter